AI, Machine Learning Enhance Track Inspection

Written by Sperry Rail

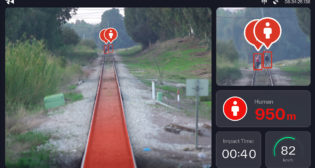

Elmer is now in use on Sperry’s entire North American non-stop track monitoring fleet, including ATS rail-road vehicles.

Sperry Rail has been active in non-destructive testing for rail faults for more than 90 years. In a drive to deliver greater efficiency in track monitoring, the supplier has developed Artificial Intelligence (AI) and machine learning applications that it is now installing in regular service.

As demand for track access grows, infrastructure managers from around the world face a daily battle to keep their railways operational during limited maintenance intervals.

Predictive and preventative rather than interval-based maintenance is the Holy Grail for infrastructure managers. AI and machine learning applications are increasingly facilitating these strategies. Sperry’s solution, Elmer, named after Dr. Elmer Sperry, the company’s founder, is an AI system that relies on neural networks—a set of algorithms designed to recognize patterns—to identify crack signatures in rail scans.

Sperry’s vehicle-mounted ultrasound, induction and vision systems record hundreds of miles of track each day, generating vast quantities of data that are now analyzed using a scalable cloud computing solution running the Elmer neural networks. Since July 1, 2019, data from all of Sperry’s non-stop fleet in North America has been processed using Elmer, while additional North American, European, African and South American clients are set to benefit as their systems become compatible with Elmer.

The technology, including the AI, neural networks and training data, was developed entirely in-house. The overall objective is to minimize the number of possible suspect points identified on the track. This will reduce the amount of information presented to analysts, who will continue to make the final decision, filtering out false positives before suspected faults are sent out for validation on the track itself. By focusing analysts’ attention on fewer suspect points, the intention is to reduce both the risk of error and the amount of time people spend inspecting track. Elmer can also target large and priority faults.

Challenge

In developing Elmer, Sperry faced the challenge of separating non-flaw detections from genuine flaw detections. This is currently done with a rules-based pre-processing system that highlights potential flaws, which are then assessed by the human analysts and identified in the field.

The neural network approach was influenced by methods used in medicine, where networks classify features in scanned images. Figure 1 shows a schematic of a composite neural network that has been developed to process one or more sets of transducer inputs through a range of deep Convolutional Neural Networks (CNNs).

The CNNs identify patterns of key features in the signal and then combine this feature knowledge within a fully connected layer. This layer can optionally be fed into a recurrent neural network made up of Long Short-Term Memory cells (LSTMs), which take account of the local data context within the wider data stream, such as the presence of other nearby features.

The final output layer classifies the input dataset, identifying whether it is a known feature. The structure is implemented in TensorFlow, the open-source AI mathematics library developed by Google. For different feature detections, paths into the neural network are turned on or off depending on which signals the network can learn from. For example, bolt hole crack (BHC) detection uses only the ultrasound inputs; transverse defect detection can use ultrasound and induction; surface damage and weld detection use the eddy current signal.
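The idea of switching input paths on or off per feature type can be sketched in a few lines of Python. This is only an illustration: the dictionary, the feature names and the `active_paths` helper are hypothetical and are not taken from Sperry's TensorFlow implementation. The mapping simply mirrors the examples given in the text.

```python
# Hypothetical mapping of feature type to the sensor inputs the network
# may learn from, following the examples in the article text.
FEATURE_INPUTS = {
    "bolt_hole_crack": {"ultrasound"},
    "transverse_defect": {"ultrasound", "induction"},
    "surface_damage": {"eddy_current"},
    "weld": {"eddy_current"},
}

# All input paths assumed to exist in the composite network.
ALL_INPUTS = ("ultrasound", "induction", "eddy_current")


def active_paths(feature):
    """Return an on/off flag for every input path for one feature type."""
    enabled = FEATURE_INPUTS[feature]
    return {name: name in enabled for name in ALL_INPUTS}


if __name__ == "__main__":
    for feature in FEATURE_INPUTS:
        print(feature, active_paths(feature))
```

In a real model, the flags would decide which convolutional branches receive data for a given detector; here they are just booleans.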

To detect BHCs, it is necessary to find several thousand examples of ultrasound signatures of good bolt holes and cracked bolt holes, as well as examples of other features that are not of interest but that the neural network must be trained to ignore.

Figures 2 and 3 show examples of benign bolt holes and cracked bolt hole signatures, respectively, with colors representing different transducer reflections. There is a huge variation in the ultrasound signatures, but they follow a number of basic patterns. This situation is typical of a problem that is very hard to define with rules but can work well with neural networks if there is enough variety in the training examples.

Once the neural network is trained, it must be validated using data to which it has not previously been exposed. This helps to ensure that the knowledge is generalized and is not picking up non-general features of the set examples.
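A minimal sketch of holding data back for validation is shown below. The function name `split_holdout`, the 20% fraction and the fixed seed are assumptions chosen for illustration; the article does not say how Sperry partitions its examples.

```python
import random


def split_holdout(examples, holdout_fraction=0.2, seed=0):
    """Shuffle labeled examples and reserve a fraction that is never trained on.

    Returns (training_examples, validation_examples).
    """
    if not 0.0 < holdout_fraction < 1.0:
        raise ValueError("holdout_fraction must be between 0 and 1")
    shuffled = list(examples)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_holdout = max(1, int(len(shuffled) * holdout_fraction))
    return shuffled[n_holdout:], shuffled[:n_holdout]


if __name__ == "__main__":
    train, validation = split_holdout(range(100))
    print(len(train), "training examples,", len(validation), "held out")
```

Because no example appears in both lists, a good score on the held-out set suggests the network has learned general patterns rather than memorizing the training examples.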

Having passed validation, the neural network can be used to predict the location of features in each of the day’s recordings. The recordings pass through the neural network as overlapping windows of data, and each window is marked according to what it is predicted to contain.
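Passing a recording through the network in overlapping windows can be illustrated as follows. The window size and step are hypothetical parameters; the article does not give the values used in Elmer.

```python
def overlapping_windows(samples, window_size, step):
    """Yield (start_index, window) pairs over a recording.

    Choosing step < window_size makes consecutive windows overlap, so a
    feature is seen several times as it moves through the window.
    """
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    for start in range(0, len(samples) - window_size + 1, step):
        yield start, samples[start:start + window_size]


if __name__ == "__main__":
    recording = list(range(10))  # stand-in for a stream of sensor samples
    for start, window in overlapping_windows(recording, window_size=4, step=2):
        print(start, window)
```

Each window would then be classified by the network, and the prediction recorded against the window's position along the rail.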

Figure 4 shows an example of a few meters of ultrasound data from a rail. The colored stripes indicate what the neural network predicted when the data window was under the crosshairs. Green marks BHCs (such as the one currently under the crosshairs); red marks rail end detections; blue marks good bolt holes; yellow marks bond pins. There will usually be a number of repeat indications as a feature passes through the neural network window, including occasional false indications. These indications are used to vote on the recognition that will be reported at a location. With a well-trained network, false positives are reduced by a factor of three or more.
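The voting step could look something like the sketch below. The article does not describe the actual voting rule, so a simple majority with a minimum vote count is assumed here; the `vote` function, its `min_votes` parameter and the label names are hypothetical.

```python
from collections import Counter


def vote(window_predictions, min_votes=2):
    """Return the most common label among repeat indications at a location.

    Returns None when no label reaches min_votes, so that an isolated
    (possibly false) indication is not reported on its own.
    """
    counts = Counter(window_predictions)
    if not counts:
        return None
    label, n_votes = counts.most_common(1)[0]
    return label if n_votes >= min_votes else None


if __name__ == "__main__":
    # Three windows saw a bolt hole crack; one stray window disagreed.
    print(vote(["bhc", "bhc", "good_bolt_hole", "bhc"]))
```

Requiring agreement between several windows is one plausible way in which occasional false indications get outvoted.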

Transverse Defects

Just as in the bolt hole case, thousands of examples were required to train a network to detect transverse defects (TDs). In this case, there were a number of items with transverse signatures, most of which were benign but some of which were flaws. The classic rules-based recognizer could not separate these, so all were indicated as potential suspects, resulting in a large amount of work for a human analyst to remove the benign indications.

The benign indications are often easy for a human to recognize but, because of their variety, difficult to define with rules. This kind of problem is well suited to neural networks. It was decided to break down the transverse signatures into various categories of benign items and flaws. Each result from the neural network carries a confidence level that it was correctly categorized. This can be used to bias the likelihood of incorrect detections being either false positives or false negatives. The safest failure mode is to bias flaw detections to be more likely to give false positives (over-marking) and benign detections to be more likely to give false negatives (under-marking). With a well-trained network, it is possible to tune the balance to identify all significant TDs while reducing the amount of over-marking for the human analyst to consider.
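One way to bias the failure mode toward over-marking is an asymmetric confidence threshold, sketched below. The category names, the 0.2 threshold and the `classify_indication` function are illustrative assumptions, not Sperry's actual categories or tuning.

```python
def classify_indication(scores, flaw_classes, flaw_threshold=0.2):
    """Decide whether an indication is passed to the analyst.

    scores: mapping of category name to network confidence (0 to 1).
    flaw_classes: the category names that count as flaws.
    A low flaw_threshold means even modest flaw confidence is marked as
    "suspect", trading extra false positives for fewer missed flaws.
    """
    flaw_confidence = sum(
        confidence for label, confidence in scores.items() if label in flaw_classes
    )
    return "suspect" if flaw_confidence >= flaw_threshold else "benign"


if __name__ == "__main__":
    flaws = {"large_td", "reverse_td", "small_td"}  # hypothetical categories
    # The benign class scores highest, but the indication is still marked.
    print(classify_indication({"large_td": 0.3, "weld": 0.7}, flaws))
    print(classify_indication({"large_td": 0.05, "weld": 0.95}, flaws))
```

Raising or lowering the threshold is the kind of tuning the text describes: a lower value over-marks more, while a higher value removes more benign indications at greater risk of missing a flaw.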

Early results show that a well-trained network has the ability to categorize the indications, and this can be used to reduce the false positives by a factor of 10 or more by removing all benign-type indications. Furthermore, the “obvious” TD categories (large TDs, reverse TDs) can be confidently identified as critical defects. This leaves the more ambiguous small TDs and surface defects, which currently still rely on classic rules-based detection.

This approach of identifying the highest risk flaws accurately while removing the low risk features means that as the neural network is improved, the middle ground of more ambiguous items will be squeezed. A classic rules-based approach will continue to be used where appropriate—for example, to meet definition-based procedural actions defined by some railways.

Development of the system was followed by work on validation. A validation set was constructed in which hundreds of flaws were mixed into a data set containing many thousands of non-flaws, with particular emphasis on indications that resemble flaws. This validation set is constantly updated with new examples, including any false identifications, to make sure it is representative of what might be found when the network is in use. Whenever a neural network is trained with new data, it is run against the validation set, and the results are compared with those of previous versions.
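Comparing a retrained network with its predecessor on the same validation set might be sketched as follows. The two metrics and the function names are assumptions made for illustration; the article does not specify which measures Sperry tracks between versions.

```python
def validation_counts(predicted, actual):
    """Count missed flaws and false positives on a validation set.

    predicted and actual are equal-length sequences of booleans,
    where True means "flaw".
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    return {
        "missed_flaws": sum(1 for p, a in pairs if a and not p),
        "false_positives": sum(1 for p, a in pairs if p and not a),
    }


def is_regression(new_counts, previous_counts):
    """Return True if the new network is worse on either measure."""
    return any(new_counts[key] > previous_counts[key] for key in previous_counts)


if __name__ == "__main__":
    truth = [True, False, False, True, False]
    previous = validation_counts([True, True, False, True, False], truth)
    new = validation_counts([True, False, False, True, False], truth)
    print(previous, new, is_regression(new, previous))
```

Running every retrained network through the same check gives a simple, auditable record of whether each version improved on the last.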

Current development work is focused on three areas: improving the scope of the AI to eventually offer comprehensive analysis of all fault types; building a Big Data infrastructure, which is essential to manage the vast amounts of data used in AI projects; and improving the scope and robustness of the processing pipeline to manage the throughput to high internal service level agreements.

“It is important to realize that the level of engineering required to convert an AI/machine learning proof of concept to a successful product or process is an order of magnitude more challenging than an equivalent software-only project,” says Sperry Vice President Global Business Development Robert DiMatteo. “As a result, Sperry puts a significant emphasis on ensuring the system is robust and that AI’s continual learning is easy and auditable.”

Sperry has developed proofs of concept that analyze eddy current data to find features, as well as prototypes that can extract features from induction sensors. These two incremental technologies offer a broad-scope view of the rail and permit enhanced data analysis that will be rolled into the Elmer process. Ultimately, these systems, and possibly vision systems, will support a combined assessment of the rail, where each system can enhance the results of the others.